Product leadership for healthcare-grade AI systems built to earn trust under pressure.

Alignment-minded product leadership for high-stakes AI teams.

I help healthcare and AI teams ship systems that still work when reality hits.

I help teams turn intent into daily operating reality: clinical evals tied to patient risk, clear decision ownership, and recovery paths that work at 3am. I map failure modes to real-world impact, design escalation paths that leave evidence, and build review loops clinicians will actually use.

Quick start

Mobile quick start

Clear paths for the most common intents on a phone: contact, proof, and how the work runs.

Patient reach

100M+ patient–clinician connections across clinical workflows

Audit-ready

Policy checks, approvals, and overrides leave evidence you can show

Incident learning

Postmortems feed evals and guardrail updates with clear owners

Proof points

The numbers and outcomes that back the work.

The Proof

A quick scan of the teams and outcomes I have led.

Product leadership

Scaling clinical communication with safety rails.

Platform strategy

Consumer-directed health experiences with clear escalation paths.

Founder

Financial immunization for doctors with transparent guardrails.

Choose a trail

Follow the thread that fits your role.

Common triggers

Short signals that show a system needs binding.

Methodology

The research practice behind my product decisions.

My product work is grounded in Ethotechnics—applied research on decision quality, escalation paths, and reliable recovery. In plain terms: define how a system should behave under stress, then build the controls to make that true.

I evaluate systems by their failure modes: how quickly issues are detected, who can intervene, what patient risk is created, and whether the organization learns fast enough to prevent repeats.

Full-Stack Context

Three lenses that connect my engineering roots to product and systems leadership.

The Engineer

Focus: The Code

I started in bioengineering, which taught me to treat constraints as design inputs and to turn ambiguity into measurable systems.

The Founder

Focus: The Product

I build tools that survive contact with operations—pairing ambition with ownership, instrumentation, and accountability.

The Theorist

Focus: The System

I study how institutions allocate time, delay, and decision authority—and translate that into decision rights, eval plans, and escalation maps teams can run.

Writing

Essays, notes, and audits on building trustworthy systems.

Browse by topic

Latest essays

"We condemn the excesses" isn’t an apology; it’s a tactic. From Amritsar 1919 to Minneapolis 2026, discover how governments use condemnation to protect their power, delay accountability, and ensure the system survives its own violence.

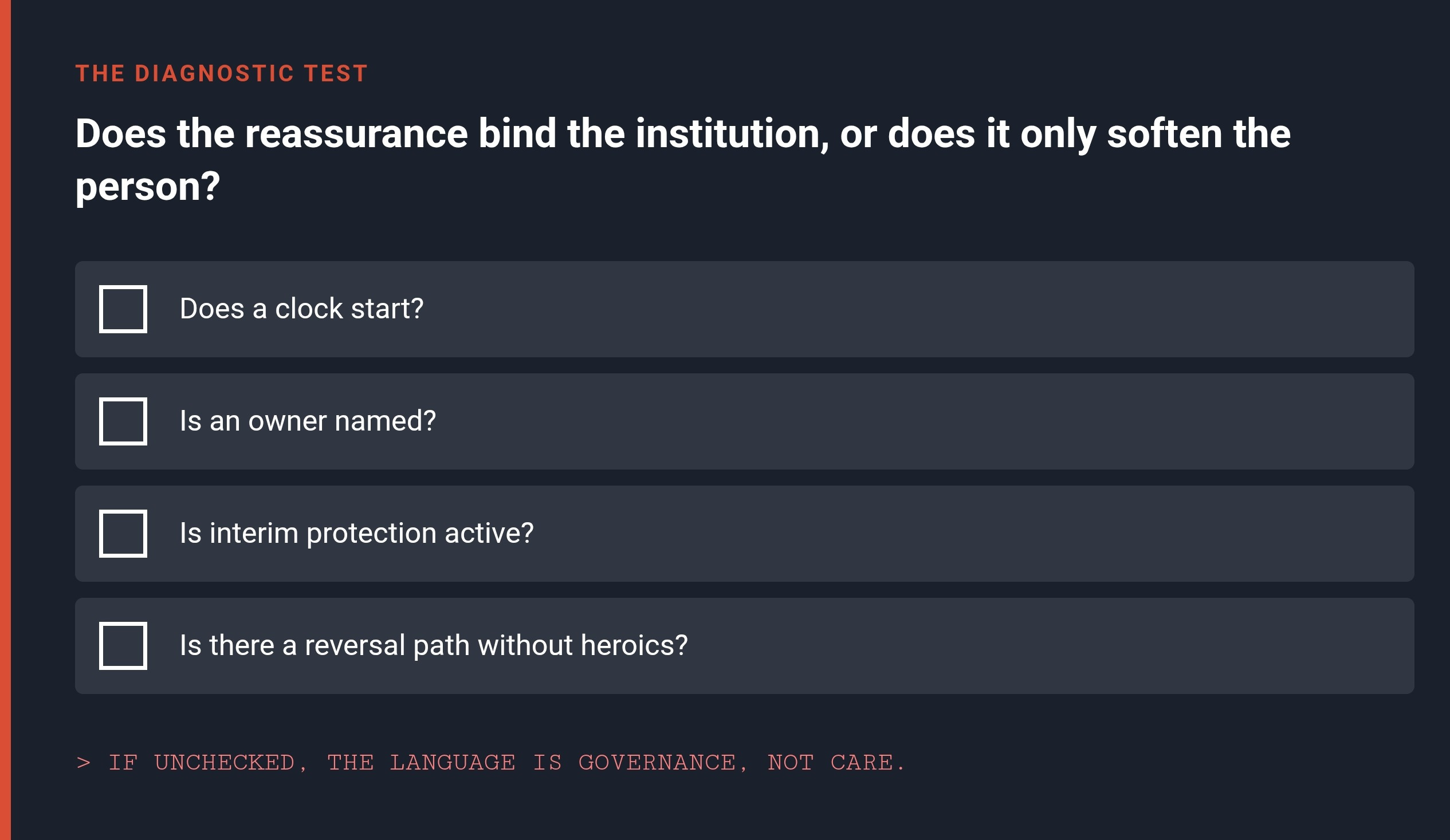

Why “we care” substitutes for obligation—and how delay gets disguised as kindness.

People are not becoming inherently dishonest, lazy, or cynical. They are becoming game-theoretically optimal for the environment they have been placed in.

We are living through a divergence between rights and remedies. If a system is "95% accurate" but concentrates errors on the vulnerable, fairness metrics are irrelevant. Here is a better way to measure justice.

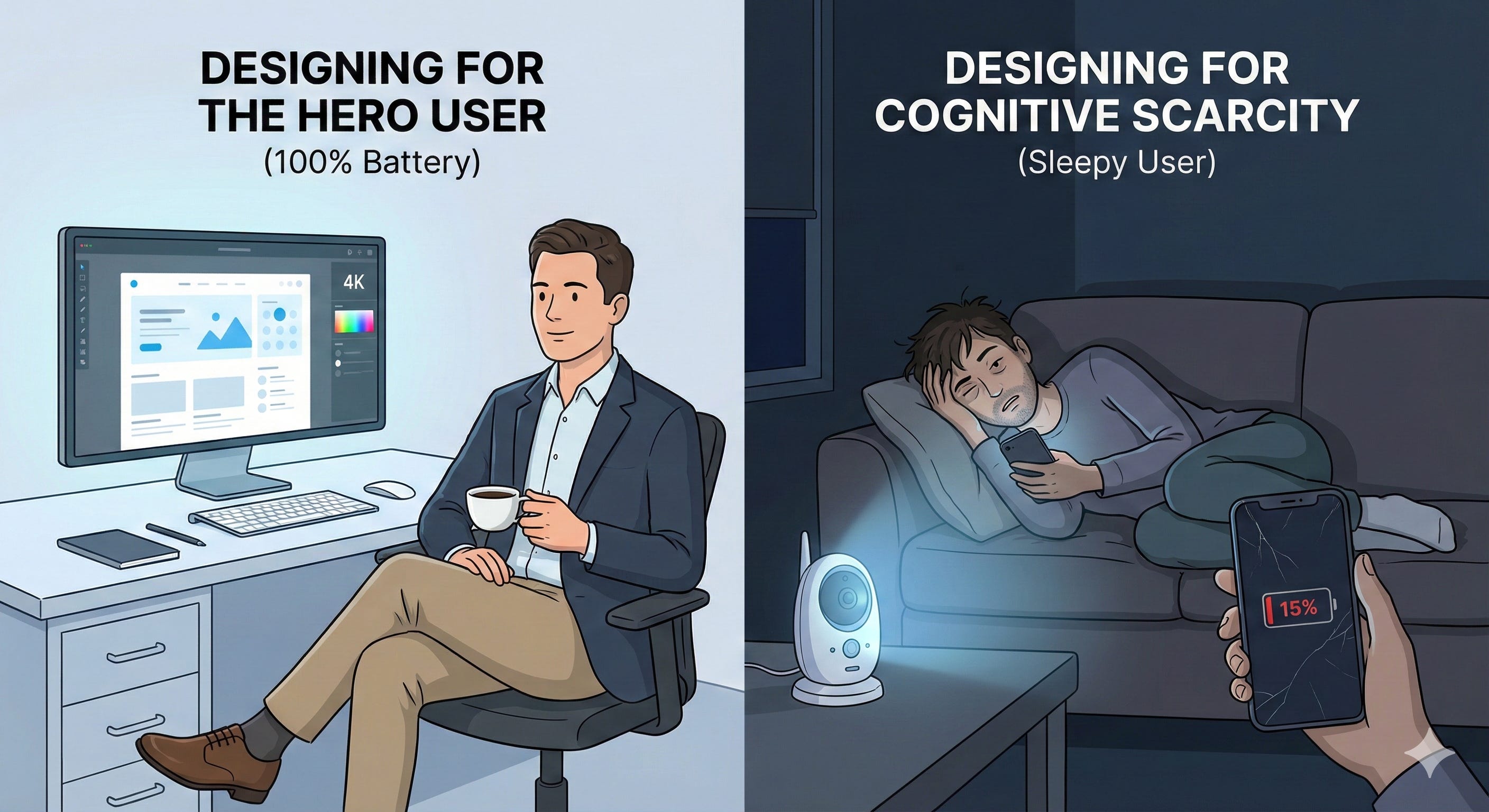

Stop designing for the idealized "Hero User." Learn how to build resilient interfaces that work when your user is stressed, tired, and operating on 15% battery.

Resilience is a subsidy we pay to cover the cost of structural failure.